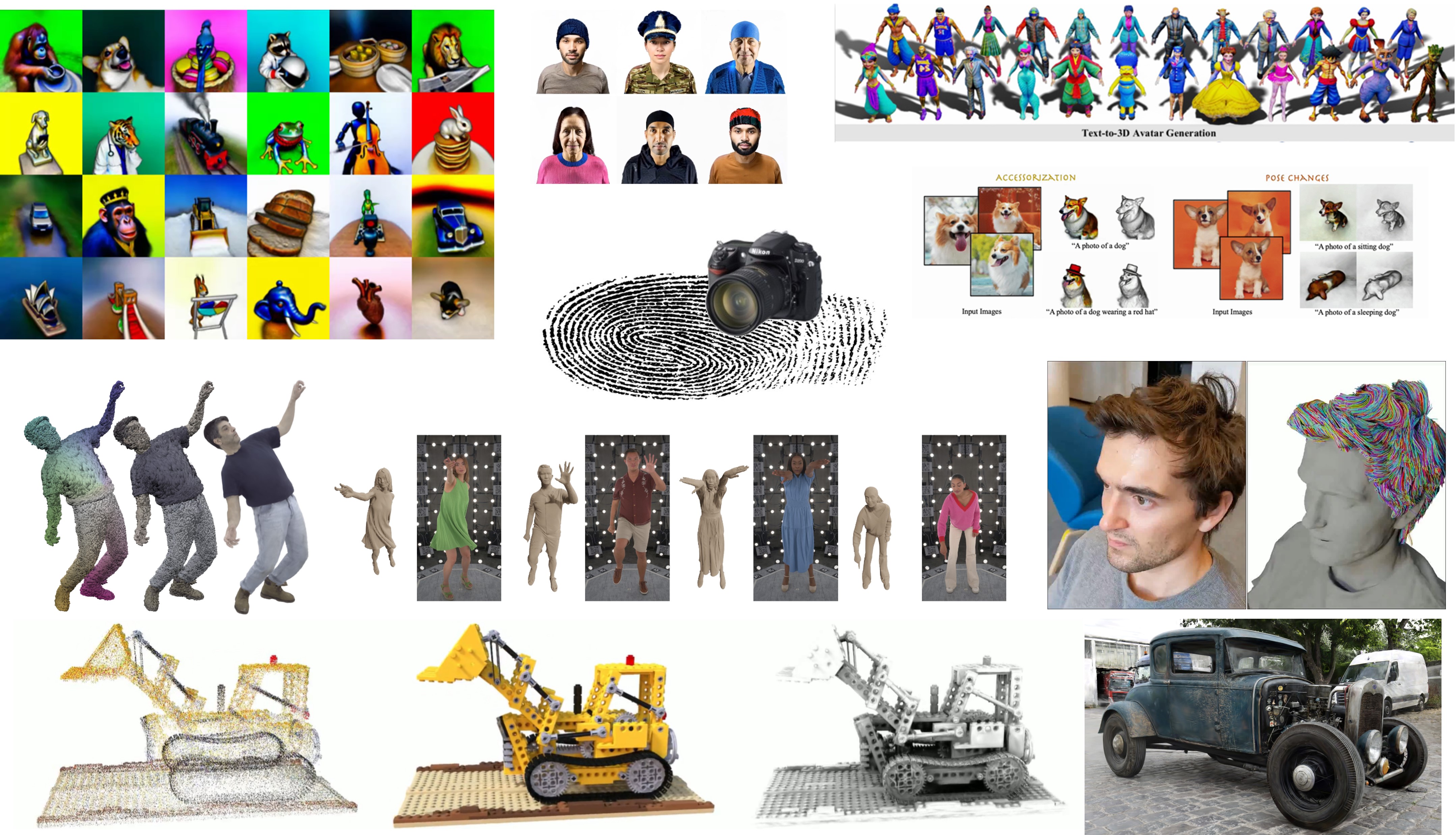

Lecture: AI-based 3D Graphics and Vision

Lecturer: Justus Thies

Teaching Assistants: Jalees Nehvi

Content

The lecture covers AI-based 3D reconstruction from various input modalities (webcams, RGB-D cameras such as Kinect, Realsense, …). It builds upon the classical 3D reconstruction methods discussed in the ‘3D Scanning & Motion Capture’ lecture and shows how components and data structures of those methods can be replaced or extended by methods from AI. It starts with basic concepts of 2D neural rendering, including methods such as Pix2Pix and Deferred Neural Rendering. It then moves on to more advanced topics such as 3D/4D neural scene representations. Training those representations requires an understanding of differentiable rendering. The lecture introduces methods for static and dynamic reconstruction with different levels of controllability and editability.

- Concept of 2D Neural Rendering
- Deferred Neural Rendering, AI-based Image-based Rendering
- 3D Neural Rendering and Neural Scene Representations
- Neural Radiance Fields (NeRFs)
- Neural Point-based Graphics, Gaussian Splatting
- Differentiable Rendering (Rasterization, Volume Rendering, Shading)
- Relighting and Material Reconstruction
- DeepFakes
- Outlook: detection of synthetic media

Aim

The aim is a basic understanding of how AI methods can be used for 3D capture of objects, scenes, and humans. This includes the principles of 2D and 3D neural rendering, as well as differentiable rendering (inverse graphics).