CVPR 2020: Tutorial on Neural Rendering

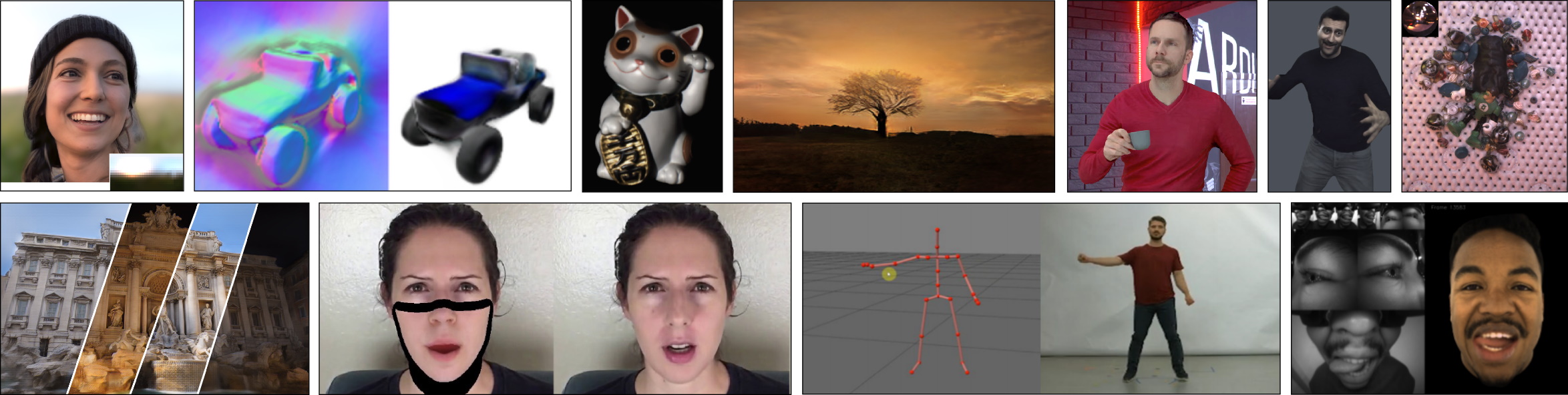

Neural rendering is a new class of deep image and video generation approaches that enable explicit or implicit control of scene properties such as illumination, camera parameters, pose, geometry, appearance, and semantic structure. It combines generative machine learning techniques with physical knowledge from computer graphics to obtain controllable and photo-realistic outputs. This tutorial teaches the fundamentals of neural rendering and summarizes recent trends and applications. Starting with an overview of the underlying graphics, vision, and machine learning concepts, we discuss critical aspects of neural rendering approaches. Specifically, we emphasize which aspects of the generated imagery can be controlled, which parts of the pipeline are learned, explicit vs. implicit control, generalization, and stochastic vs. deterministic synthesis. The second half of the tutorial focuses on the many important use cases for the described algorithms, such as novel view synthesis, semantic photo manipulation, facial and body reenactment, relighting, free-viewpoint video, and the creation of photo-realistic avatars for virtual and augmented reality telepresence. Finally, we conclude with a discussion of the social implications of this technology and investigate open research problems.
